Installing and Configuring Apache Hadoop for Windows

As mentioned in previous posts, I’ve been spending a lot of time lately with “Big Data” technologies, most recently Apache Hadoop and the distributed computing and analytics tools built around it.

What Exactly Is Hadoop?

If you haven’t been exposed to it, Apache Hadoop is an open-source framework that enables distributed processing of very large data sets. In a nutshell, Hadoop consists of a distributed filesystem and a framework that lets you execute distributed MapReduce jobs.

Hadoop has been developed for various flavors of Linux, but is created in such a manner that it can actually run on Windows as long as you have a shell that can support running Linux commands and scripts. While I don’t think anyone would argue that you’d want to do this for a large-scale production environment, running Hadoop on Windows allows folks like me who are very comfortable with installing, using and maintaining Windows servers to also work with exciting technologies such as Hadoop.

The purpose of this post is to demonstrate how you can install and configure a Hadoop cluster using Windows Server 2008 R2. In future posts I’ll demonstrate using Hadoop and the Hadoop Distributed File System (HDFS) to perform large-scale analytics on unstructured data (which is what it excels at!).

Preparing to Install Hadoop

The first thing you’ll need to do in order to install Hadoop is to prepare a “virgin” Windows Server. While I suppose it’s not really necessary, from my perspective the fewer things that are installed on the server the better. In my case, I’m using Windows Server 2008 R2 with SP1 installed and nothing else. No roles are enabled and no extra software has been installed.

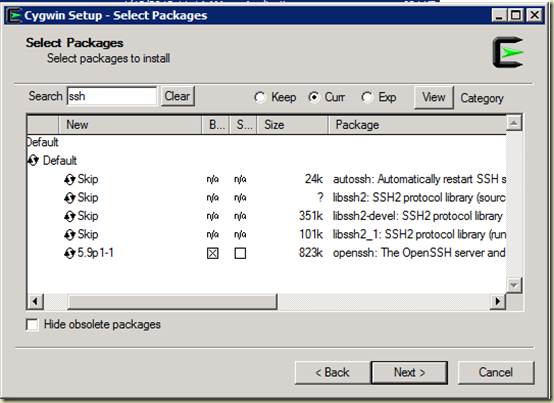

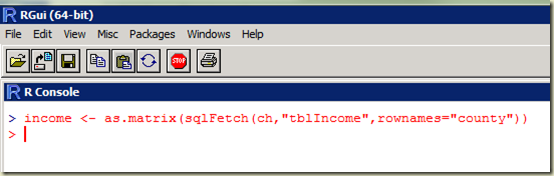

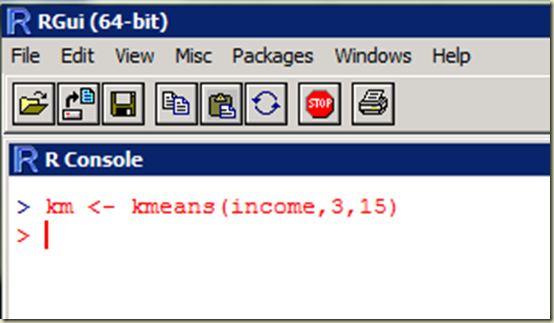

Once you have a server ready (multiple servers if you want to install an actual Hadoop cluster), you’ll want to install Cygwin. If you aren’t familiar with it, Cygwin is basically a Linux bash shell for Windows. Cygwin is open source and is available as a free download from: http://cygwin.com/install.html Keep in mind that this is just a web installer.

To support Hadoop, we need to make sure that we install the openssh package and its associated prerequisites. To do this, start the setup.exe program, select c:\cygwin as the root folder, and then click next. When you get to the screen that asks you to select a package, search for openssh and then click the “skip” text (not exactly intuitive, but it works) to enable the checkbox for install as shown below:

Once you have selected the openssh package, click next and then answer “Yes” when asked if you want to install the package prerequisites. Click next and then finish the wizard for install. This will take some time, so be patient.

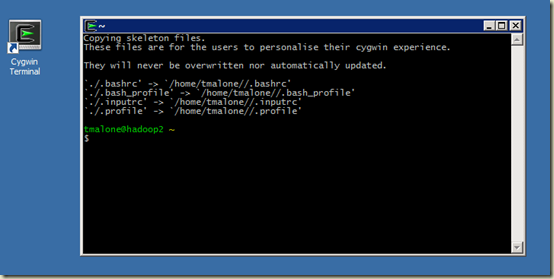

Once the install is complete, you’ll want to start the Cygwin terminal as administrator (right-click on the icon and select “run as administrator”). This will then set up your shell environment as follows:

Once Cygwin is installed and running properly, you’ll need to configure the ssh components in order for the Hadoop scripts to execute properly.

Configuring OpenSSH

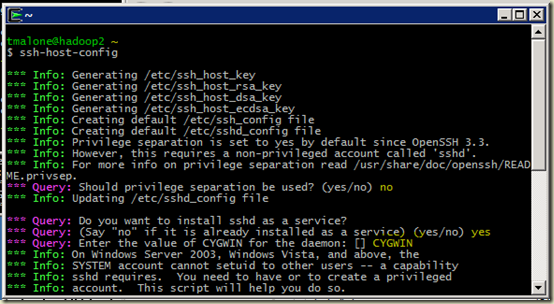

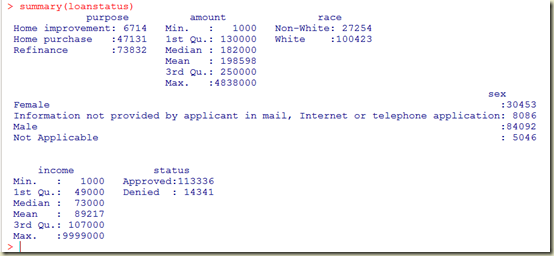

The first step in configuring the ssh server is to run the configuration wizard. Do this by executing ssh-host-config from the Cygwin terminal window, which will start the wizard. Answer the questions as follows:

(No to Privilege separation, Yes to install as a service, and CYGWIN as the value)

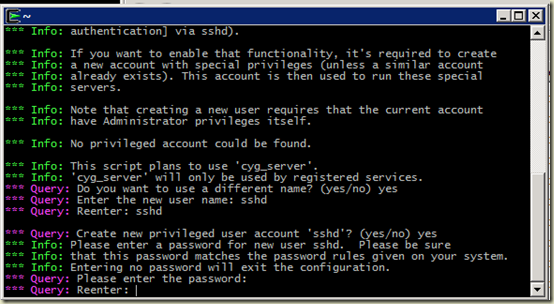

(yes to different name, and sshd for the name, yes to new privileged user, and a password you can remember)

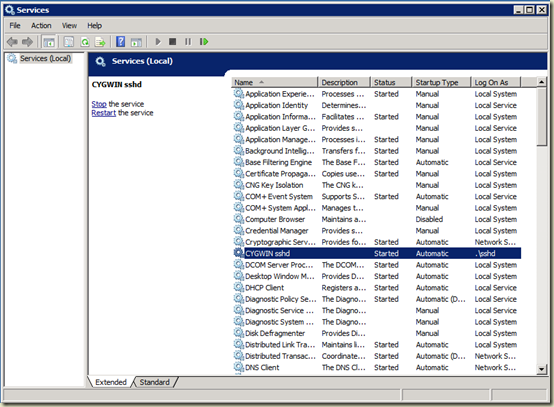

Once the configuration is complete, open the Services control panel (start/administrative tools/services) and right-click on the Cygwin sshd Service and select start. It should start.

If the service doesn’t start, the most likely cause is that the ssh user was not created properly. You can manually create the user and then add it to the service startup and it should work just fine.

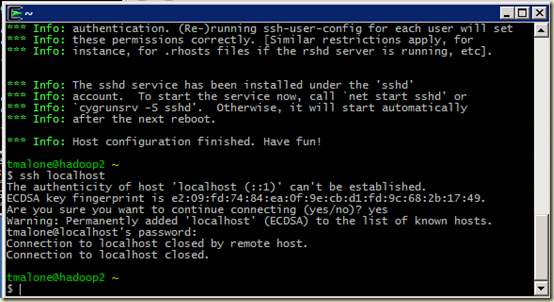

Once the service is started, you can test it by entering the following command in the Cygwin terminal:

ssh localhost

Answer “yes” when prompted about the fingerprint, and you should be ready to go.

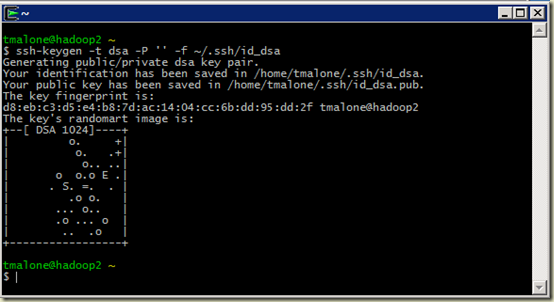

The next step in configuring ssh for use with Hadoop is to configure the authentication mechanisms (otherwise you’ll be typing your password a lot when running Hadoop commands).

Since the Hadoop processes are all invoked via shell scripts and make use of ssh for all operations on the machine (including local operations), you’ll want to generate key-based authentication that can be used so that ssh doesn’t require the use of a password every time it’s invoked. In order to do this, execute the following command in the Cygwin terminal:

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

Once you have the key generated and saved, you’ll need to append the public half to the list of authorized keys with the following command:

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

This will take the key you just generated and save it to the list of authorized keys for ssh.
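If you run the cat command above more than once, the same key ends up in the file several times. Here is a minimal sketch of a safer variant, assuming a POSIX shell such as the Cygwin bash terminal. The function name authorize_key is my own invention, and the permission values are the ones sshd usually expects on Linux; Cygwin may treat them differently.

```shell
# Hypothetical helper (not part of OpenSSH or Hadoop): append a public key
# to an authorized_keys file only if that exact line is not already present.
authorize_key() {
    pub_file=$1
    auth_file=$2
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    # -q quiet, -x whole-line match, -F fixed strings, -f read patterns from file
    if ! grep -qxF -f "$pub_file" "$auth_file"; then
        cat "$pub_file" >> "$auth_file"
    fi
    # sshd commonly ignores key files that other users can write to
    chmod 700 "$(dirname "$auth_file")"
    chmod 600 "$auth_file"
}

# Example call (paths taken from the commands above):
#   authorize_key ~/.ssh/id_dsa.pub ~/.ssh/authorized_keys
```

After that, running ssh -o BatchMode=yes localhost true should return without asking for a password; BatchMode tells ssh to fail instead of prompting, which makes it a handy check.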

Now that ssh is properly configured, you can move on to installing and configuring Hadoop.

Downloading and installing Hadoop

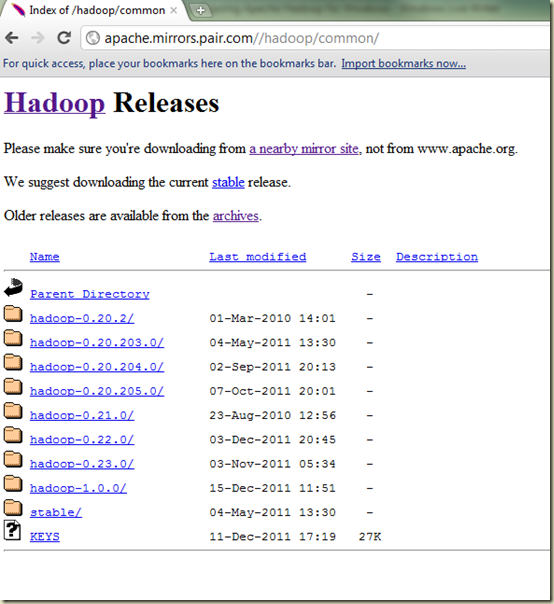

Apache Hadoop is available for download from one of many mirror sites linked from the following page: http://www.apache.org/dyn/closer.cgi/hadoop/common/

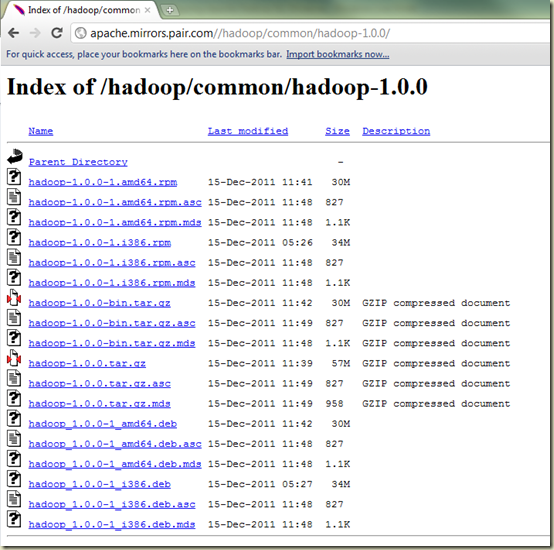

Basically just choose one of the sites, and it should bring you to a page that looks similar to the following figure. Note that I am going to use version 1.0.0, which as of this writing is the latest, but the release train for Hadoop sometimes moves pretty fast, so there will likely be more releases available very soon.

Click on the version you want to install (again, I am going to be using 1.0.0 for this post) and then download the appropriate file. In my case, that will be hadoop-1.0.0-bin.tar.gz as shown in the following figure:

Once you have the file downloaded, you’ll want to use a program such as WinRAR which can understand the .tar.gz file format.

The actual install process is very simple. You simply open the downloaded file in WinRAR (or another program that can understand the format) and extract all files to c:\cygwin\usr\local as shown below:

Once you are done, you’ll want to rename the c:\cygwin\usr\local\hadoop-1.0.0 folder to hadoop. (This just makes things easier, as you’ll see once we start configuring and testing Hadoop.)

You will also need the latest version of the Java SDK installed, which can be downloaded from http://www.java.com (make sure you download and install the SDK and not the runtime, because you’ll need the server JVM). To make things easier, you can change the target folder for the Java install to c:\java. This is not a requirement, but I find it easier to use than the default path, because otherwise you’ll have to escape all of the spaces and parens when adding the folder to the configuration files.

Configuring Hadoop

For the purposes of this post, I’m just going to configure a single node Hadoop cluster. This may seem counter-intuitive, but the point here is that we get a single node up and running properly and then we can add additional nodes later.

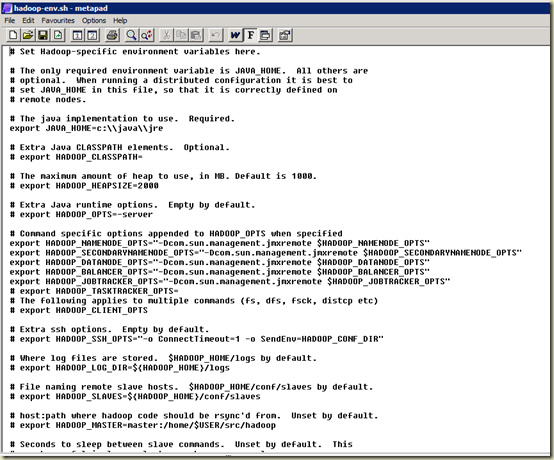

One of the key configuration needs for Hadoop is the location where it can find the Java Runtime. Note that the configuration files we’re going to work with are Unix/Linux files and thus don’t look very good (or work very well) when you use a standard Windows text editor like Notepad. To keep things simple, use a text editor that supports Unix formats, such as MetaPad.

Assuming that you extracted the Hadoop files as described above and renamed the root folder to hadoop, open the C:\cygwin\usr\local\hadoop\etc\hadoop\hadoop-env.sh file. Locate the line that contains JAVA_HOME, remove the # in front of it, and replace the folder with the location where you installed the Java SDK (in my example, C:\java\jre). Note that this is a Unix file, so special characters must be escaped with a “\”, which means that in my case the path is c:\\java\\jre.

This is really all that you need to change in this file, but if you want to know more about the contents you can check out the Hadoop documentation here: http://hadoop.apache.org/common/docs/r0.20.2/quickstart.html (Note that this guide is based on the 0.20.2 release and *not* the 1.0.0 release I am detailing here. The docs haven’t quite caught up with the release at the time of this posting.)

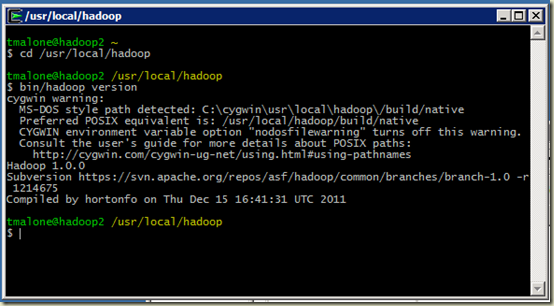

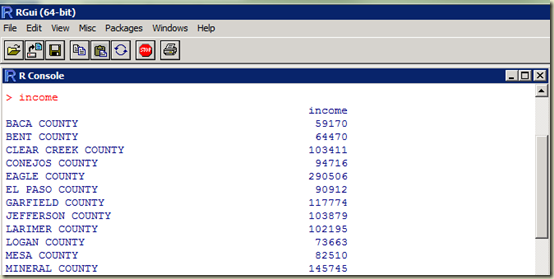

Once you save and close that file, you can verify that Hadoop is properly running by executing the following command inside of Cygwin. (Make sure you change to the /usr/local/hadoop directory first)

bin/hadoop version

You should see a Cygwin warning about MSDOS file paths, and then the Hadoop version and the Subversion revision it was built from, as shown in the figure above. If you do not see similar output, you likely do not have the path to your Java home set correctly. If you installed Java in the default path, remember that all spaces, slashes and parens must be escaped first. The default path would look like: C:\\Program\ Files\ \(x86\)\\Javaxxxx (you get the idea, and you probably understand now why I said it would be better to put it in a simple folder).
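Escaping a long Windows path by hand is easy to get wrong, so here is a small sketch that does it with sed. It assumes the escaping rules are exactly the ones I described above (double every backslash, put a backslash in front of spaces and parens); the function name escape_win_path is made up for this post.

```shell
# Hypothetical helper: print a Windows path escaped for hadoop-env.sh.
escape_win_path() {
    # First expression: turn every "\" into "\\".
    # Second expression: put a "\" in front of each space and parenthesis.
    printf '%s\n' "$1" | sed -e 's/\\/\\\\/g' -e 's/[ ()]/\\&/g'
}

# Example call (note the single quotes, so the shell leaves the path alone):
#   escape_win_path 'C:\java\jre'
```

Paste the printed value after JAVA_HOME= in hadoop-env.sh. For the example call above the function prints c:\\java\\jre style output with the drive letter unchanged.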

Once you have the basic Hadoop configuration working, the next step is to configure the site environment settings. This is done via the C:\cygwin\usr\local\hadoop\etc\hadoop\hdfs-site.xml file. Again, open this file with MetaPad or a similar editor, and add the following configuration items to the file. Of course, replace “hadoop2” with your host name as appropriate:

<?xml version="1.0" encoding="utf-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://hadoop2:47110</value>
  </property>
  <property>
    <name>mapred.job.tracker</name>
    <value>hadoop2:47111</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>

This basically configures the filesystem and job tracker on the local host (in my case it’s named hadoop2) and sets the DFS replication factor to 2, meaning that HDFS tries to keep two copies of each block. You can read about this file and its values here: http://wiki.apache.org/hadoop/HowToConfigure (again, remember that the docs are outdated for my particular installation, but they still work).

We will also need to configure the mapred-site.xml file to specify the configuration for the MapReduce service:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!-- Put site-specific property overrides in this file. -->
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>hadoop2:8021</value>
  </property>
</configuration>

This basically configures the MapReduce job tracker to use port 8021 on the local host. There is a LOT more to both of these configuration files; I’m only presenting the basics to get things up and running here.
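Since a typo in these files is the most common reason things fail later, it can help to read a value back out before starting anything. The following is a rough sketch, not a real XML parser: it assumes each name and value sits on one line, as in the files above, and the function name get_property is my own.

```shell
# Hypothetical helper: print the <value> that follows a given <name>
# in a Hadoop *-site.xml file. Prints nothing if the name is not found.
get_property() {
    file=$1
    name=$2
    awk -v want="<name>$name</name>" '
        index($0, want) { found = 1 }      # exact, literal match on the name element
        found && /<value>/ {
            sub(/.*<value>/, "")           # drop everything up to the value
            sub(/<\/value>.*/, "")         # drop everything after it
            print
            exit
        }
    ' "$file"
}

# Example call from /usr/local/hadoop:
#   get_property etc/hadoop/mapred-site.xml mapred.job.tracker
```

If the command prints nothing, the property name is misspelled or missing from the file.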

Now that we have the basic environment set up, we need to format the HDFS filesystem that Hadoop will use. In the configuration file above, I did not specify a location for the DFS files. This means that they will be stored under /tmp. This is OK for our testing and for a small cluster, but for production systems you’ll want to make sure that you specify the location by using the dfs.name.dir and dfs.data.dir configuration items (which you can read about in the link I provided above).
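To give you an idea of what those two items look like, here is a tiny sketch that prints a property element in the same shape as the ones above. The helper name and the example folders are hypothetical; pick locations that make sense on your own server.

```shell
# Hypothetical helper: print one <property> element for a Hadoop site file.
# It does no XML escaping, so keep "&" and "<" out of the arguments.
hadoop_property() {
    printf '<property>\n  <name>%s</name>\n  <value>%s</value>\n</property>\n' "$1" "$2"
}

# Example calls (the folder names are placeholders, not recommendations):
#   hadoop_property dfs.name.dir /usr/local/hadoop/dfs/name
#   hadoop_property dfs.data.dir /usr/local/hadoop/dfs/data
```

The printed elements go inside the configuration element of hdfs-site.xml, next to the properties already there.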

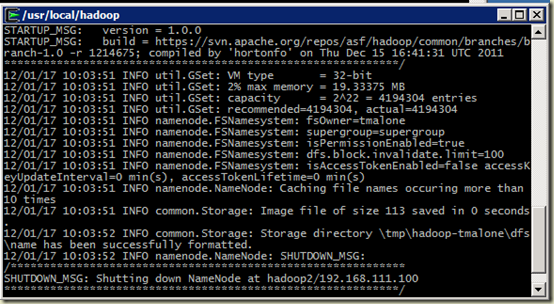

To format the filesystem, enter the following command in Cygwin:

bin/hadoop namenode -format

If you have properly configured the hdfs-site.xml file, you should see output that is similar to the above. Note in my case that the default folder location is /tmp/hadoop-tmalone/dfs/name. Remember this directory name, as you will need it later.

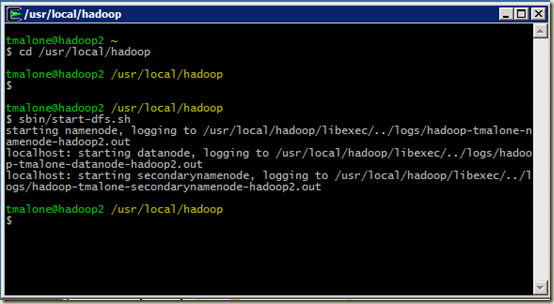

Now that we have the configuration in place and the filesystem formatted, we can start the Hadoop subsystems. The first thing we’ll want to do is start the DFS subsystem. Do this with the following command in the Cygwin terminal:

sbin/start-dfs.sh

This should take a few moments, and you should see output as shown in the figure above. Note that the logs are stored in /usr/local/hadoop/logs. You can verify that DFS is running by examining the namenode log:

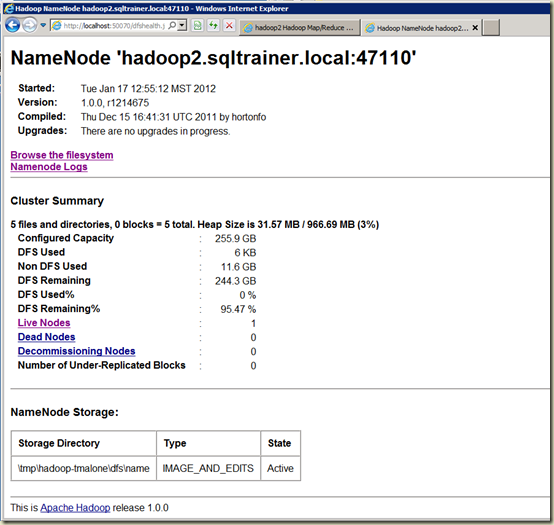

You can also verify that the DFS service is running by checking the monitor (assuming you used the ports as I described them in the hdfs-site.xml configuration above). To check the monitor, open a web browser and navigate to the following site:

If the DFS service is properly running, you will see a status screen like the above. If you get an error when attempting to open that page, it is likely that DFS is not running and you will need to check the log file to determine what has gone wrong. The most common problem is a misconfiguration of the hdfs-site.xml file, so double check that the file is correct.
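Reading through the namenode log by eye gets tedious, so here is a minimal sketch that pulls out only the problem lines. It assumes the log uses the usual log4j layout, where the level (INFO, ERROR, FATAL and so on) appears as its own word after the timestamp; the function name check_log is made up.

```shell
# Hypothetical helper: show ERROR and FATAL lines from a Hadoop log file.
# Returns 1 if any were found, 0 if the log looks clean.
check_log() {
    if grep -E ' (ERROR|FATAL) ' "$1"; then
        return 1
    fi
    echo "no ERROR or FATAL lines in $1"
}

# Example call: pass the path of the namenode log found in /usr/local/hadoop/logs
#   check_log /usr/local/hadoop/logs/<your namenode log file>
```

A clean result does not prove that DFS is healthy, but an ERROR or FATAL line usually points straight at the setting that is wrong.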

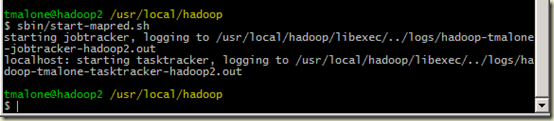

Once DFS is up and running, you can start the MapReduce process as follows:

sbin/start-mapred.sh

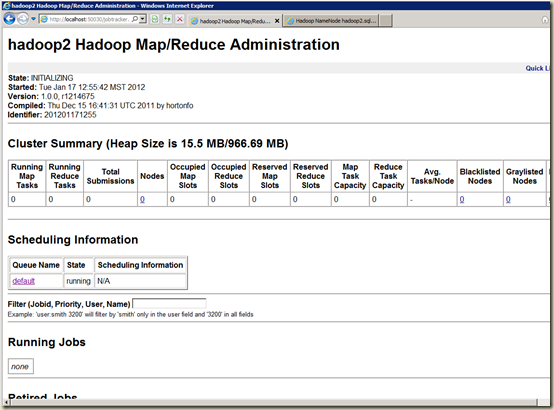

You can test that the MapReduce process is running by checking the monitor. Open a web browser and navigate to:

Now we have success! We’ve installed and configured Hadoop, formatted the DFS filesystem, and started the basic processes necessary to use the power of Hadoop for processing!
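If you restart the server often, the two start commands can be wrapped in one small function. This is only a sketch: the name start_hadoop is mine, it defaults to the /usr/local/hadoop folder used in this post, and it only looks at the exit status of each script, which may not catch a daemon that dies a few seconds after starting.

```shell
# Hypothetical helper: start DFS first, then MapReduce, and stop at the
# first script that reports a failure.
start_hadoop() {
    hadoop_dir=${1:-/usr/local/hadoop}
    "$hadoop_dir/sbin/start-dfs.sh" || { echo "start-dfs.sh failed" >&2; return 1; }
    "$hadoop_dir/sbin/start-mapred.sh" || { echo "start-mapred.sh failed" >&2; return 1; }
}

# Example call:
#   start_hadoop /usr/local/hadoop
```

It is still worth checking the logs and the monitor pages afterwards.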

Testing the installation

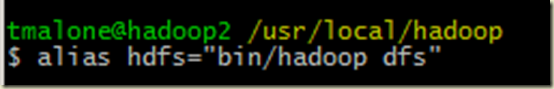

Each Hadoop distribution comes with a set of samples that can be used to verify that the system is functional. The samples are stored in Java “jar” files and are located in the hadoop/share/hadoop directory. One simplistic test would be to copy some text files into DFS and then use the sample MapReduce job to enumerate them. First though, you will likely want to set up an alias to make entering the commands a little easier. In my case, I will alias the hadoop dfs command to simply “hdfs”. To accomplish this, type the following command in the Cygwin terminal window:

alias hdfs="bin/hadoop dfs"
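One catch with this alias is that bin/hadoop is a relative path, so it only works while you are in the /usr/local/hadoop directory. A minimal sketch of an alternative is a shell function that uses an absolute path. HADOOP_DIR is a variable name I made up for this example (I avoided HADOOP_HOME on purpose, so it doesn’t clash with anything the Hadoop scripts read).

```shell
# Assumption: Hadoop was extracted to /usr/local/hadoop as described earlier.
HADOOP_DIR=${HADOOP_DIR:-/usr/local/hadoop}

# Same idea as the alias, but it works from any directory and also in scripts.
hdfs() {
    "$HADOOP_DIR/bin/hadoop" dfs "$@"
}

# Example call:
#   hdfs -ls conf
```

Use either the alias or the function, not both, because in an interactive shell the alias takes precedence.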

For the first part of our test, we will copy the configuration files from the hadoop directory into DFS. In order to do this, we will use the dfs -put command. For more information on the put command, see the docs here: http://hadoop.apache.org/common/docs/r0.20.0/hdfs_shell.html (again, remember that the docs are a little behind).

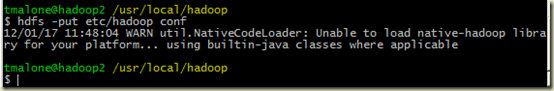

In the Cygwin terminal window (still in the /usr/local/hadoop directory) execute the following command:

hdfs –put etc/hadoop conf

This will load all of the files in the /usr/local/hadoop/etc/hadoop directory into HDFS in the conf folder. Since the conf folder doesn’t exist, the –put command will create it. Note that you receive a warning about the platform, but that is OK the files will still copy.

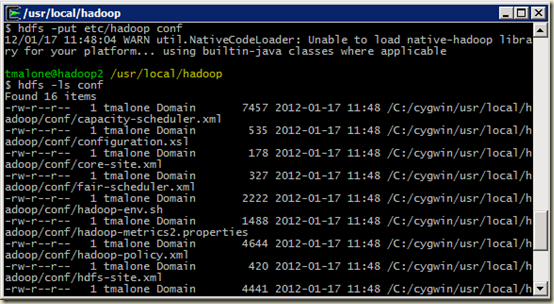

You can verify that the files were copied by using the dfs –ls command as follows:

hdfs –ls conf

Now that we are sure the files are stored in HDFS, we can use one of the examples that is shipped with Hadoop to analyze the text in the files for a certain pattern. The samples are located in the /usr/local/hadoop/share/hadoop folder, and it’s easiest to change to that folder and execute the sample there. In the Cygwin terminal, execute the following command:

cd /usr/local/hadoop/share/hadoop

Once we’re in the folder, we can run a simple IO test to determine how well our cluster DFS IO will perform. In my case, since I’m running this on a VM with a slow disk, I don’t expect much out of the cluster, but it’s a very nice way to test to see that DFS is indeed functioning as it should. Execute the following command to test DFS IO:

../../bin/hadoop jar hadoop-test-1.0.0.jar TestDFSIO -write -nrFiles 10 -fileSize 1000

If you don’t see any exceptions in the output, you have successfully configured Hadoop and the DFS cluster is operational.
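The test prints a lot of output, so an exception is easy to miss as it scrolls by. Here is a small sketch of a wrapper that runs any command, shows its output, and then tells you whether the word “Exception” appeared anywhere. The name run_and_check is made up, and the check is a plain text search, nothing Hadoop-specific.

```shell
# Hypothetical helper: run a command, echo its output, and fail if the
# command failed or if its output mentions an exception.
run_and_check() {
    out=$(mktemp)
    status=0
    "$@" > "$out" 2>&1 || status=$?
    cat "$out"
    if grep -q 'Exception' "$out"; then
        echo "found 'Exception' in the output" >&2
        status=1
    fi
    rm -f "$out"
    return "$status"
}

# Example call: put run_and_check in front of the DFS IO test command
#   run_and_check ../../bin/hadoop jar hadoop-test-1.0.0.jar <test name and options>
```

A return value of 0 means the command succeeded and no exception text was seen.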

Conclusion

While it isn’t exactly a simple process, you can indeed get Apache Hadoop up and running on a Windows platform. I’ve taken the path of configuring Hadoop with Cygwin as the shell, but there are those who claim to have installed and configured Hadoop on Windows without the use of Cygwin. Either way, I’m just glad it works and that folks who don’t want to invest in building a Linux environment have the ability to play around with and use Hadoop.