Statistical Analysis with R and Microsoft SQL Server 2012

It’s been a while since I’ve written a blog post, but that doesn’t mean I haven’t been thinking about things to write about and discuss here. Recently, I had the opportunity to attend an alpha delivery of EMC’s “Data Science and Big Data Analytics” course (read about the course on the EMC Education Services site here: http://education.emc.com/guest/campaign/data_science.aspx ) and was really taken by a couple of points that the course brought home:

1) There’s much more to statistical analysis than I had ever thought about. (Being a Microsoft SQL Server and Microsoft BI Stack kinda guy, I always figured that you needed Excel and SSAS to do real statistical analysis.)

2) Big Data Analytics is a really cool technology discipline!

The course itself was based on the EMC Greenplum Database (Community Edition, which you can download and use for free!), which is an amazing piece of technology (I am very impressed with its features and its integration with things like Hadoop for real parallel computing capabilities), as well as the open source “R” statistical analysis language.

While it’s true that my role at EMC means that I focus more on the non-Microsoft stack these days, that doesn’t stop me from thinking about how I can apply things that I learn to the Microsoft platform. With that in mind, one of the things that I kept coming back to in the class was, “How would I do this using SQL Server?” As it turns out, using R with SQL Server isn’t all that difficult, and it really does open up an entirely new way of thinking about statistical analysis (for me anyway).

R and Statistical Analysis

R is an open source “software environment” that is used primarily for statistical analysis. A huge part of “Data Science” is of course statistical analysis, so the two go hand-in-hand. One very cool aspect of R is the fact that the graphics environment is “built in” (I put that in quotes because R is very modular and requires you to load packages for just about anything you do, although a basic “plot” command is included in the base distribution) and allows you not only to analyze data, but also visualize it “on the fly” as well. You can read about (and download) R from the main website here: http://www.r-project.org/. If you are really interested in R, you should make a point of reading the R Journal here: http://journal.r-project.org/current.html

One thing that is very clear about R, though, is that it’s a “data source agnostic” environment. Many of the examples that use data, however, either deal with flat files or connect to open source databases like MySQL or Postgres. This of course doesn’t mean you can’t use R with SQL Server; it just means you have to dig a little deeper and understand how to connect the R environment to your SQL Server database.

R and SQL Server

Once you download the R environment (the screen shots and examples I provide here are from the Windows version of RGUI version 2.14.0, which I downloaded from the UC Berkeley mirror here: http://cran.cnr.berkeley.edu/ ), you will need to install the RGUI environment and decide whether you will use the 32-bit or 64-bit client. This is a very important distinction, since R will connect to SQL Server via ODBC, and ODBC drivers are very platform (32-bit versus 64-bit) specific.

In my case, I am going to use the 64-bit GUI and will be using SQL Native Client 11 to connect to SQL Server 2012. (There is no specific reason for me to use SQL Server 2012 here, other than that I’ve been playing around with the release candidate and my development environment is all set up for it.) I have a large database that I use for “big data” type demonstrations that also works well for statistical analysis work. I will likely write another article on how this database was constructed, but know that the data is very real world (it is built from 2010 data collected via the US Home Mortgage Disclosure Act) and well-suited for testing statistical analysis theories and data mining.

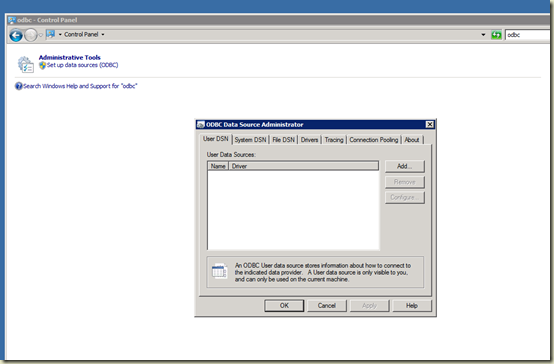

Once you decide what client you will be using, you will need to configure an ODBC DSN to connect to your database. (I decided to use a System DSN for my work, so I’ll walk through the creation of that DSN.) To create a new DSN, use the platform-specific version of the ODBC control panel (for Windows Server 2008 R2, simply go to Control Panel and search for “ODBC”; you will then see “Set up data sources (ODBC)”), as shown in the following figure:

Click the “System DSN” tab, and then click the Add button and walk through the wizard to connect to your database. Remember the name of the DSN you create, as you’ll need to specify it from within R in order to connect. In my case, the DSN is named “HMDAData”.

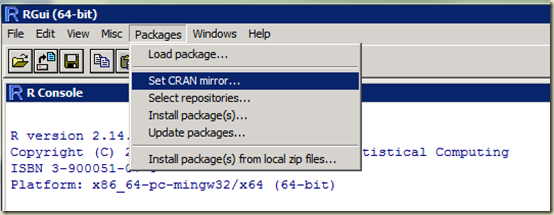

In order to use the ODBC connection within R, you’ll need to download the “RODBC” library, which can be found here: http://cran.cnr.berkeley.edu/web/packages/RODBC/index.html. Select the appropriate zip file and download it to a folder on the machine where you installed R. Once it is downloaded, from within the RGUI, select Packages, and then select “Install packages from local ZIP file” as shown in the following figure:

Point to the zip file you just downloaded and R will install the appropriate package and make it available. Once it is available, you can connect to SQL Server by using the following R commands as shown in the figure:
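A minimal sketch of those commands, assuming the RODBC package is installed and the “HMDAData” System DSN created earlier is reachable from the same (32-bit or 64-bit) R client. The odbcGetInfo call is an optional addition for checking the connection, not part of the original steps:

```r
# Load the RODBC package so its functions are available in this session.
library(RODBC)

# Open a channel through the System DSN created earlier.
# "HMDAData" is the DSN name used in this post; substitute your own.
ch <- odbcConnect("HMDAData")

# Optional check (an addition for illustration): show driver and server details.
odbcGetInfo(ch)
```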

Note: R is case sensitive for most operations. Also note that I am entering the commands directly into the R console. Another way to do this is to use the File menu to create a new R script and submit commands from the script to the console. I’ll show this in later posts. Also note that assignment is done in R by using “<-”; the assignment line is basically saying, “assign the output of the odbcConnect function, which has been passed the name of the DSN, to an object named ‘ch’”. This will make more sense as you get into R more. What I have done with these commands is load the RODBC library and create a “channel” object that I will use to query SQL Server.

Data Mining with R

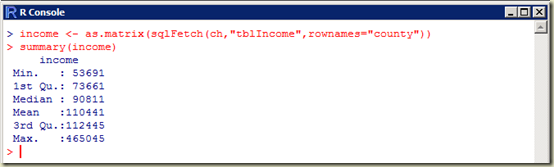

In my database, I have a table named “tblIncome” that has 2 columns. Each row holds a county in Colorado and the average salary of everyone who applied for a home loan within that county in 2010. If I wanted to find some “clusters” of salaries within Colorado and see how the income among potential home buyers/refinancers is grouped together, I would take the data and apply K-means clustering techniques to identify the clusters. Normally I’d use SSAS Data Mining, or maybe Excel with the Predixion add-in, but now, thanks to R, I can do that analysis directly within R.

The first step is to obtain the data from the SQL Server table and load it into a matrix in R. This can be accomplished using the following command:
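A minimal sketch of that command, assuming a live connection through the “HMDAData” DSN; the connection lines are repeated only so the snippet stands on its own. The type check at the end is an addition: as.matrix returns a character matrix when any column holds text, and kmeans needs numeric input.

```r
library(RODBC)
ch <- odbcConnect("HMDAData")   # DSN name from this post

# sqlFetch reads the whole table into a data frame (like SELECT *);
# as.matrix then converts the data frame to a matrix.
income <- as.matrix(sqlFetch(ch, "tblIncome"))

# Addition for illustration: confirm the matrix is numeric before clustering.
is.numeric(income)
```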

The “sqlFetch” command simply attaches to a table and does a SELECT * from that table. The “as.matrix” ensures that the data is loaded into a matrix that matches the table structure. You can get a summary of the data with the following command:

If you just want to see what the income object looks like, you can issue the following command:

Now that we have the data loaded into a matrix, we should sort it, which makes the clusters easier to see when the data is plotted (k-means itself does not require sorted input). Issue the sort command as follows:
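The summary, print and sort steps above can be sketched as follows. So that the snippet runs without a database, it uses a small made-up stand-in for the income matrix (the column name AvgIncome and the values are hypothetical). Note that sort() returns a plain sorted vector rather than a matrix, which kmeans also accepts.

```r
# Hypothetical stand-in for the matrix fetched from tblIncome.
income <- matrix(c(161, 83, 371, 95, 150, 78),
                 ncol = 1, dimnames = list(NULL, "AvgIncome"))

summary(income)   # min, quartiles, mean and max for each column
income            # typing the object name prints its contents

# sort() drops the matrix dimensions and returns a sorted numeric vector.
income <- sort(income)
income
```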

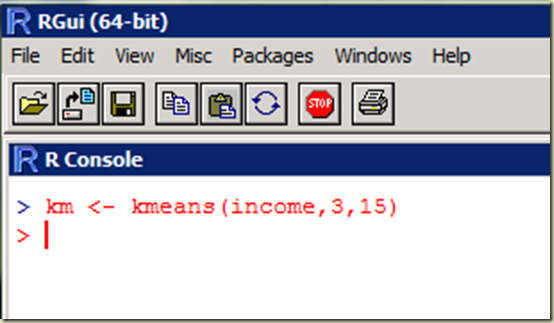

Now that we have the matrix loaded and sorted, we can feed it into the kmeans clustering algorithm. As a note here, you can get help on anything in R by typing ? followed by the command name. For example, issue ?kmeans to read all about the kmeans command. For the purposes of this blog entry, I’m just going to use the default algorithm, make a guess at 3 clusters to start with, and allow up to 15 iterations. I’ll assign the output to the object “km”. The command looks like:

If you want to know what the km object contains, you can issue the following command:
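The fit and inspection steps above can be sketched as follows. The incomes here are simulated stand-ins (in thousands) so the snippet runs without the database; with real data, income would hold the sorted values from tblIncome. The set.seed call is an addition that makes the random starting centers repeatable.

```r
# Simulated stand-in for the sorted income values (hypothetical data).
set.seed(42)
income <- sort(c(rnorm(30, mean = 80, sd = 10),
                 rnorm(20, mean = 160, sd = 15),
                 rnorm(10, mean = 370, sd = 30)))

# Ask for 3 clusters and allow at most 15 iterations (default algorithm).
km <- kmeans(income, centers = 3, iter.max = 15)

km            # prints cluster sizes, means, assignments and sums of squares
names(km)     # lists the components stored in the result
km$centers    # the cluster means
```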

Note that the output has given us 3 clusters with means at 83K, 371K and 161K. We also can see that the object contains various components. To statisticians, this information is very easy to understand, but if you’re like me you probably want to visualize the data. Since I am interested in seeing the cluster associations, I can plot the cluster component. I can use the following command to create the plot:
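A minimal sketch of that plot command, again on simulated stand-in incomes so it runs on its own. Because the values are sorted, each cluster tends to show up as a contiguous band of one color.

```r
# Simulated stand-in for the sorted income values (hypothetical data).
set.seed(42)
income <- sort(c(rnorm(30, 80, 10), rnorm(20, 160, 15), rnorm(10, 370, 30)))
km <- kmeans(income, centers = 3, iter.max = 15)

# Plot each value against its position, colored by cluster number (1, 2 or 3).
plot(income, col = km$cluster,
     xlab = "Position in sorted data", ylab = "Average income")
```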

The command generates a plot that looks like this:

The colors represent our clusters. Since I just guessed that 3 income clusters would be appropriate, the graphic is likely not a very good representation of the true income clusters. In order to determine what the number of clusters should be, I can take the income matrix, compute the within-group sum of squares for a range of cluster counts, and see how many clusters I should have. (You can read about this at http://www.statmethods.net/advstats/cluster.html )

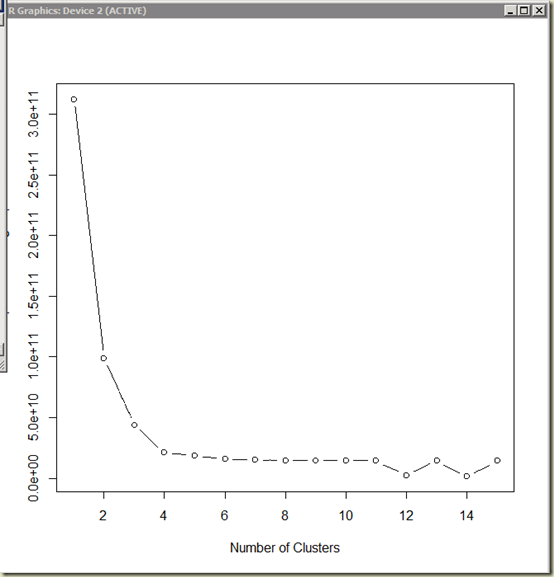

R has the capability of creating loops, so we can iterate over a range of cluster counts, run k-means for each one, and record the resulting within-group sum of squares. We can then plot the results and look for an “elbow” to determine how many clusters would be appropriate with the data that we have. You can accomplish this with the following command:
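A sketch of such a loop, along the lines of the approach described at the statmethods.net page linked above, on simulated stand-in incomes. The upper limit of 10 clusters and the nstart value are arbitrary choices for illustration.

```r
# Simulated stand-in for the sorted income values (hypothetical data).
set.seed(42)
income <- sort(c(rnorm(30, 80, 10), rnorm(20, 160, 15), rnorm(10, 370, 30)))

max_k <- 10
wss <- numeric(max_k)

# With a single cluster, the within-group sum of squares is the total one.
wss[1] <- (length(income) - 1) * var(income)

# For each candidate cluster count, fit k-means and total the
# within-group sums of squares across its clusters.
for (k in 2:max_k) {
  fit <- kmeans(income, centers = k, iter.max = 15, nstart = 10)
  wss[k] <- sum(fit$withinss)
}

# Look for the "elbow" where adding clusters stops helping much.
plot(1:max_k, wss, type = "b",
     xlab = "Number of clusters",
     ylab = "Within-group sum of squares")
```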

This generates the following plot:

The plot tells us that the appropriate number of clusters is 4.

Conclusion

The intent of this post wasn’t to teach you how to perform statistical analysis using k-means clustering, but rather to demonstrate how some very advanced statistical analysis can be performed with SQL Server data and R, without SSAS modeling or advanced Excel use.

Since I am spending a lot of time in the Data Science discipline, I will be posting a lot of R examples using SQL Server data.
